Novel Sensors for Robotics Applications: We are exploring how emerging sensors can be used in robotics applications. New sensors offer different tradeoffs and capabilities, which create new opportunities for robotics. For example, we are working with Single Photon Avalanche Diode (SPAD) time-of-flight sensors, which provide distance information in small, low-power packages. These sensors provide different information than more traditional ones: for example, they report statistical distributions over an area rather than detailed measurements.

Research Themes

Visualization Theory: Summarization, Uncertainty, ...: We are exploring fundamental questions about how to present information with visualizations. We are examining the central concept of summarization to understand how people use summaries and what strategies can be used to create them. This leads to a broader question: how do people use visualizations to ask and answer questions? We are also trying to codify the process of creating effective visualizations, to make that process easier for designers.

Awareness of (and with) Robots: We are interested in how we can help human stakeholders (operators, observers, etc.) develop an appropriate understanding of robots and their situations. This requires us to design methods (such as visualizations) that communicate robot state, environment, plans, and history to users. One aspect we explore is using robots to provide viewpoints (i.e., to move cameras) for observing robots or other aspects of the environment.

Shared Autonomy for Robotic Inspection: We are developing robotic solutions to automate inspection, where a mobile robot with a set of sensors scans through a space. Such applications involve a human collaborator who may specify the task, supervise operation, assess the results, or guide the robot through challenging parts of the job. We seek to develop systems that share control: automating as much as possible, while allowing for user contributions as necessary. This application project is driving technical work in motion synthesis, mobile sensing, and user experience.

Video, Animation and Image Authoring: Our goal is to make it easier for people to create usable images and video. For example, we have developed methods for improving pictures and video as a post-process (e.g., removing shadows and stabilizing video). We have also worked on adapting imagery for use in new settings (e.g., image and video retargeting or automatic video editing) and on making use of large image collections (e.g., interestingness detection or panorama finding).

Visualizing English Print: To drive our data science efforts, we focused on a specific application: collaborating with English literature scholars to develop approaches for working with large collections of historical texts.

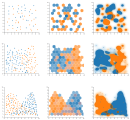

Perceptual Principles for Visualization: Understanding how people see can inform how we should design visualizations. We have been exploring how recent results in perception (e.g., ensemble encoding) can be exploited to create novel visualization designs, and how perceptual principles can guide visualization design more generally.

Communicative Characters: We are working on better ways to synthesize human motion so that animated characters (both on-screen characters and robots) can communicate more effectively. Generally, we focus on using collections of examples (such as motion capture data) to build models that can generate novel movements, or on defining models of communicative motion.

Usable VR and AR: Virtual Reality (VR) and Augmented Reality (AR) are interesting display devices that are becoming practical. We are exploring how to design VR and AR applications that address specific application tasks, and we are developing new mechanisms that will make these displays more useful across a broad range of applications.

Visualizing Comparisons for Data Science: Data interpretation tasks often involve making comparisons among the data, or can be framed as comparisons. We are developing better visualization tools for performing comparisons across a variety of data challenges, as well as better methods for inventing new designs.

Interacting with Machine Learning: People interact with machine learning systems in many ways: they must build them, debug them, diagnose them, decide whether to trust them, gain insights into their data from them, etc. We are exploring this in both directions: How do we build machine learning tools into interactive data analysis to help people interpret large and complex data? How do we build interaction tools that can help people construct and diagnose machine learning models?